When you apply for a credit card or a loan, algorithms work in the background to assess your creditworthiness. Despite ongoing advances, these systems remain imperfect because of inherent biases. As a data science student or professional, you'll inevitably face similar issues with biased datasets and should know how to combat them. Which techniques are most effective, and why do they matter?

The Critical Issue of Algorithmic Bias in Credit Scoring Models

One of the biggest issues surrounding algorithmic bias in credit scoring is that adversely affected people usually have little or no recourse for appealing unfavorable decisions. This happens because many widely used algorithms still can't explain how they reached a specific decision, leaving applicants in the dark and forcing them to trust the technology, even when it can ruin lives.

This opacity underscores the need for greater transparency in how credit scoring models are developed and deployed. Ultimately, proactive mitigation strategies are essential to ensure fair and equitable financial outcomes.

A November 2025 academic review identified numerous flaws in financial algorithms and documented their effects, including systematic disadvantages for minority groups and miscalibrated credit scores for individual borrowers. The researchers also found that these problems persisted despite the financial technology industry's promises of superior efficiency.

One of the cited studies consistently showed that female applicants received credit scores six to eight points lower than their male counterparts. The researchers determined that these gaps reduced borrowers' economic welfare and that the effects persisted across multiple borrowing cycles. Another investigation found persistent disparities affecting minority groups regardless of the type of lender the applicant chose.

Elsewhere, researchers examined the effects of using large language models to evaluate applicants' loan data. The models regularly recommended denying Black applicants or charging them higher interest rates, but did not make the same recommendations for otherwise identical white applicants. These examples show why data science professionals must stay constantly alert to the potential for bias and uphold fairness by reducing it wherever possible.
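The matched-pair idea behind these studies can be turned into a routine check on your own models. Below is a minimal Python sketch, assuming a hypothetical decision function and illustrative field names (`income`, `debt`, `race`) that don't come from the cited research. It copies each applicant, changes only the protected attribute and reports how often the decision flips. A rate above zero means the attribute directly influences decisions; this test won't catch bias that flows through correlated proxy features.

```python
from typing import Callable, Dict, List

Applicant = Dict[str, object]


def counterfactual_flip_rate(
    applicants: List[Applicant],
    decide: Callable[[Applicant], bool],
    attribute: str,
    swap: Dict[object, object],
) -> float:
    """Fraction of applicants whose decision changes when only `attribute` is swapped."""
    changed = 0
    for applicant in applicants:
        twin = dict(applicant)  # copy so the original record is untouched
        twin[attribute] = swap[applicant[attribute]]  # change only the protected attribute
        if decide(applicant) != decide(twin):
            changed += 1
    return changed / len(applicants)


# Hypothetical, deliberately biased rule used only to exercise the audit.
def biased_decide(a: Applicant) -> bool:
    penalty = 10_000 if a["race"] == "Black" else 0
    return a["income"] - a["debt"] - penalty >= 30_000


applicants = [
    {"income": 55_000, "debt": 20_000, "race": "Black"},
    {"income": 55_000, "debt": 20_000, "race": "white"},
    {"income": 90_000, "debt": 10_000, "race": "Black"},
    {"income": 40_000, "debt": 30_000, "race": "white"},
]
swap = {"Black": "white", "white": "Black"}
rate = counterfactual_flip_rate(applicants, biased_decide, "race", swap)
print(f"Decisions that flip with race alone: {rate:.0%}")
```

Only the two applicants near the approval cutoff change outcome here, which is typical: counterfactual audits tend to surface bias at the margin, where a small penalty decides the result.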

Practical Tips for Bias Detection and Mitigation

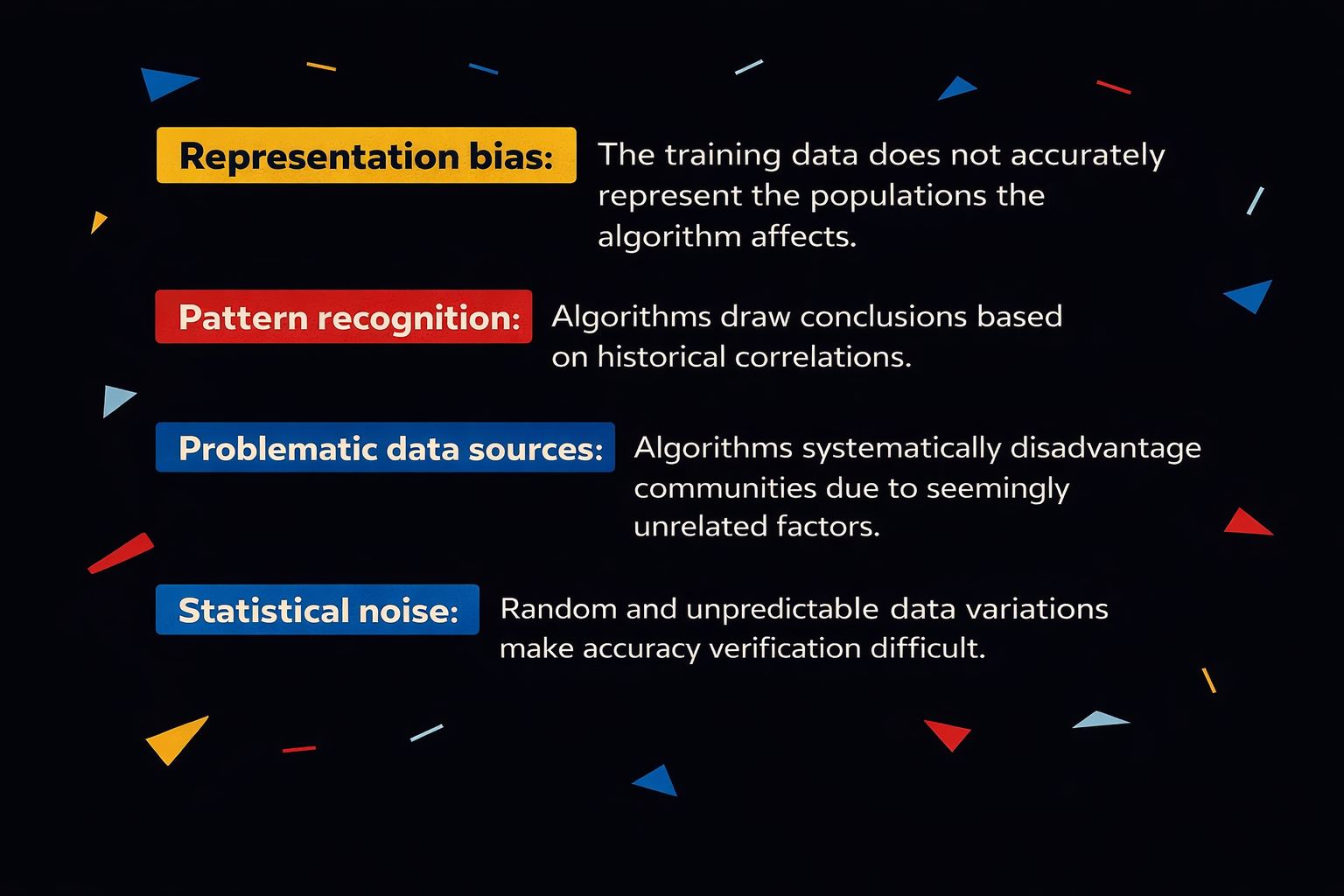

Sources of bias in credit scoring data and algorithms are more common than you might think. They can include:

One straightforward way to identify bias in training data is to learn the most common types and build algorithms that depend on them as little as possible. Regularly reviewing the training data is similarly effective because it can catch biases before they affect real applicants.
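One way to make these training data reviews routine is to compare each group's share of the data against a reference population, such as the applicant pool. The sketch below is a minimal illustration; the group labels, reference shares and the 0.8 tolerance are assumptions chosen for the example, not established standards.

```python
from collections import Counter
from typing import Dict, List


def representation_report(
    groups: List[str],
    reference_shares: Dict[str, float],
    tolerance: float = 0.8,
) -> Dict[str, Dict[str, object]]:
    """Compare each group's share of the training data with a reference share.

    A group is flagged when its observed share falls below `tolerance` times
    its reference share (the 0.8 default is an illustrative assumption).
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        ratio = observed / expected
        report[group] = {
            "observed": observed,
            "expected": expected,
            "ratio": ratio,
            "flagged": ratio < tolerance,
        }
    return report


# Hypothetical training set: group B makes up 15% of rows but 30% of applicants.
groups = ["A"] * 850 + ["B"] * 150
report = representation_report(groups, {"A": 0.7, "B": 0.3})
for group, stats in report.items():
    print(group, round(stats["ratio"], 2), "FLAGGED" if stats["flagged"] else "ok")
```

Running a check like this each time the training data is refreshed turns a one-off review into a repeatable safeguard.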

You can also perform a disparate impact analysis to detect bias. This analysis compares outcomes for a group with fewer privileges or less representation against outcomes for its counterparts. Dividing the proportion of one group that received a particular outcome, such as approval or denial, by the corresponding proportion for the other group yields a ratio, and values far from 1 suggest bias.
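The ratio is simple to compute. The sketch below uses approval (favorable-outcome) rates, which is the usual convention for the disparate impact ratio, and compares the result with 0.8, the widely used "four-fifths rule" of thumb that originated in US employment-selection guidelines. The decision lists are invented for illustration, and falling below 0.8 is a signal to investigate rather than a legal finding.

```python
from typing import Sequence


def approval_rate(decisions: Sequence[int]) -> float:
    """Share of applicants approved (1 = approved, 0 = denied)."""
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(unprivileged: Sequence[int], privileged: Sequence[int]) -> float:
    """Approval rate of the unprivileged group divided by that of the privileged group."""
    return approval_rate(unprivileged) / approval_rate(privileged)


# Hypothetical decisions: 1 = approved, 0 = denied.
group_a = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]  # 4 of 10 approved
group_b = [1, 1, 0, 1, 1, 1, 0, 1, 1, 0]  # 7 of 10 approved

ratio = disparate_impact_ratio(group_a, group_b)
print(f"Disparate impact ratio: {ratio:.2f}")  # → Disparate impact ratio: 0.57
print("Below four-fifths threshold" if ratio < 0.8 else "Within four-fifths threshold")
```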

Creating a set of fairness metrics is another practical mitigation approach, because it encourages data scientists to understand how aspects of a person's background, both those the person can and cannot control, affect outcomes. The examined attributes could include someone's employment history, income and debt, as well as the extent to which they have had equal opportunities.
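The article doesn't prescribe particular metrics, so the pair below is an assumption: two widely used group-fairness measures. Demographic parity compares overall approval rates between groups, while equal opportunity compares approval rates only among applicants who actually repaid. The group labels and data are hypothetical.

```python
from typing import Dict, List, Sequence


def _rate(values: List[int]) -> float:
    return sum(values) / len(values) if values else float("nan")


def fairness_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], group: Sequence[str], a: str, b: str
) -> Dict[str, float]:
    """Group-fairness gaps between groups `a` and `b` (negative means `a` fares worse).

    y_true: 1 if the applicant actually repaid, else 0.
    y_pred: 1 if the model approved the applicant, else 0.
    """
    def approvals(g: str) -> List[int]:
        return [p for p, gg in zip(y_pred, group) if gg == g]

    def approvals_among_repayers(g: str) -> List[int]:
        return [p for t, p, gg in zip(y_true, y_pred, group) if gg == g and t == 1]

    return {
        # Demographic parity: difference in overall approval rates.
        "demographic_parity_diff": _rate(approvals(a)) - _rate(approvals(b)),
        # Equal opportunity: difference in approval rates among applicants who repaid.
        "equal_opportunity_diff": _rate(approvals_among_repayers(a))
        - _rate(approvals_among_repayers(b)),
    }


group = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 1, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
metrics = fairness_metrics(y_true, y_pred, group, "A", "B")
print(metrics)
```

The two metrics can disagree, and in general they cannot all be satisfied at once, so choosing which gaps to track is itself a decision worth documenting.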

Advances in explainable artificial intelligence (XAI) will further improve both bias detection and mitigation. They let developers see which heavily weighted but irrelevant factors could lead to unfair outcomes, so they can correct issues early.
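For a linear scoring model, the simplest kind of explanation is exact. Each feature contributes its weight times its deviation from a baseline applicant, and these contributions sum to the difference between the two scores. Tools such as SHAP generalize this idea to nonlinear models. In the hypothetical model below, `zip_code_risk` stands in for a heavily weighted but irrelevant factor; all weights and values are invented for illustration.

```python
from typing import Dict, List, Tuple


def linear_contributions(
    weights: Dict[str, float], applicant: Dict[str, float], baseline: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Per-feature contribution of a linear score relative to a baseline applicant.

    For a linear model, weight * (value - baseline) splits the score difference
    exactly across features. Results are sorted by absolute size, largest first.
    """
    contributions = [
        (name, w * (applicant[name] - baseline[name])) for name, w in weights.items()
    ]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical model; "zip_code_risk" stands in for a feature that should not matter.
weights = {"income_k": 0.8, "debt_to_income": -40.0, "years_employed": 2.0, "zip_code_risk": -30.0}
baseline = {"income_k": 55.0, "debt_to_income": 0.30, "years_employed": 5.0, "zip_code_risk": 0.2}
applicant = {"income_k": 60.0, "debt_to_income": 0.25, "years_employed": 6.0, "zip_code_risk": 0.9}

ranked = linear_contributions(weights, applicant, baseline)
for name, value in ranked:
    print(f"{name:>15}: {value:+.1f}")
```

Here the zip-code feature outweighs every legitimate factor combined, exactly the kind of pattern an explanation should bring to a developer's attention.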

Considerations to Reduce Algorithmic Bias in Credit Scoring

Reducing algorithmic bias in credit scoring requires cooperation across roles and departments, including the people who will use the tools built on these algorithms. Committing to specific postprocessing steps helps users become familiar with an algorithm's functionality and potential shortcomings rather than automatically trusting its results. Those developing the algorithms should prioritize transparency by designing explainable models whenever possible and presenting their explanations in a form the expected audience can understand.
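The article doesn't specify which postprocessing steps to adopt. One illustrative option, sketched below as an assumption, is to route borderline scores to a person instead of deciding them automatically, so reviewers regularly see where the model is least certain. The threshold and review margin are hypothetical values.

```python
from typing import List, Tuple


def route_decisions(
    scores: List[float], threshold: float = 0.5, review_margin: float = 0.05
) -> List[Tuple[float, str]]:
    """Label each score 'approve', 'deny' or 'human_review'.

    Scores within `review_margin` of the threshold are sent to a person
    instead of being decided automatically.
    """
    routed = []
    for s in scores:
        if abs(s - threshold) <= review_margin:
            routed.append((s, "human_review"))
        elif s > threshold:
            routed.append((s, "approve"))
        else:
            routed.append((s, "deny"))
    return routed


# Hypothetical model scores (estimated probability of repayment).
routed = route_decisions([0.82, 0.53, 0.47, 0.20])
for score, label in routed:
    print(score, label)
```

Logging the reviewers' final decisions alongside the model's scores also creates a record that can feed later bias audits.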

Staying abreast of recent research helps data scientists understand which mitigation options are available. In a 2024 study, MIT researchers developed a technique that identifies and removes the specific training data points that contribute most to a model's poor performance on minority subgroups. Because it removes far fewer data points than other approaches, the technique preserves the model's overall accuracy.

The researchers confirmed that the technique can also uncover hidden sources of bias in training datasets that lack labels. This capability is significant because the data used in many applications is unlabeled. They envision combining their technique with other approaches to improve fairness in high-stakes situations, which makes it promising for the financial industry, where many decisions alter people's lives and opportunities.
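The published MIT method is designed to scale to large models and isn't reproduced here. The sketch below is a brute-force toy illustration of the same underlying idea, assuming a one-dimensional 1-nearest-neighbour classifier and invented data: retrain without each training example, measure accuracy on a minority-subgroup validation set and drop the examples whose removal helps most.

```python
from typing import List, Tuple

Point = Tuple[float, int]  # (feature value, label)


def predict_1nn(train: List[Point], x: float) -> int:
    """Label of the nearest training point (1-nearest-neighbour classifier)."""
    return min(train, key=lambda p: abs(p[0] - x))[1]


def accuracy(train: List[Point], data: List[Point]) -> float:
    return sum(predict_1nn(train, x) == y for x, y in data) / len(data)


def harmful_points(train: List[Point], subgroup_val: List[Point], k: int = 1) -> List[int]:
    """Indices of up to k training points whose removal most improves subgroup accuracy.

    Uses leave-one-out retraining, which is only feasible for tiny models.
    """
    base = accuracy(train, subgroup_val)
    gains = []
    for i in range(len(train)):
        reduced = train[:i] + train[i + 1:]
        gains.append((accuracy(reduced, subgroup_val) - base, i))
    gains.sort(reverse=True)
    return [i for gain, i in gains[:k] if gain > 0]


# Hypothetical data: the point at 2.4 is mislabeled and hurts the minority subgroup.
train = [(0.0, 0), (1.0, 0), (2.4, 0), (3.0, 1), (4.0, 1), (5.0, 1)]
minority_val = [(2.5, 1), (2.6, 1)]
majority_val = [(0.4, 0), (4.4, 1)]

to_drop = harmful_points(train, minority_val)
cleaned = [p for i, p in enumerate(train) if i not in to_drop]
print(to_drop, accuracy(train, minority_val), accuracy(cleaned, minority_val))
print(accuracy(cleaned, majority_val))
```

Removing a single example fixes the subgroup's errors while the majority validation set stays fully correct, which mirrors the trade-off the MIT researchers aimed for at far larger scale.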

Maintaining responsible data science practices requires equipping professionals with the skills to detect and mitigate bias in an evolving technological landscape. In a 2025 study, a biased training dataset that contained a disproportionately high number of white people with happy faces caused the AI algorithm to associate race with emotional expression.

Across three experiments with human participants, most individuals did not notice the bias. This result shows why data scientists need ongoing education to spot less obvious examples.
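A simple statistical check can catch the kind of entanglement that human reviewers missed. The sketch below, which is not the method used in the study, computes Cramér's V between a sensitive attribute and the training label: 0 means no association, and values near 1 indicate a strong one. The group names, labels and counts are hypothetical.

```python
import math
from collections import Counter
from typing import Sequence


def cramers_v(a: Sequence[str], b: Sequence[str]) -> float:
    """Cramér's V between two categorical columns (0 = no association)."""
    n = len(a)
    joint = Counter(zip(a, b))
    a_counts, b_counts = Counter(a), Counter(b)
    k = min(len(a_counts), len(b_counts))
    if k < 2:
        return 0.0  # a constant column carries no association
    chi2 = 0.0
    for x, nx in a_counts.items():
        for y, ny in b_counts.items():
            expected = nx * ny / n  # count expected if the columns were independent
            observed = joint.get((x, y), 0)
            chi2 += (observed - expected) ** 2 / expected
    return math.sqrt(chi2 / (n * (k - 1)))


# Hypothetical training set: group_1 is shown smiling far more often than group_2.
attribute = ["group_1"] * 70 + ["group_2"] * 30
label = ["happy"] * 60 + ["sad"] * 10 + ["happy"] * 10 + ["sad"] * 20
v = cramers_v(attribute, label)
print(f"Cramér's V: {v:.2f}")
```

Running this for every sensitive attribute against the label before training takes seconds and doesn't depend on anyone happening to notice the pattern.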

Stay Vigilant to Maintain Fairness

Your work on credit scoring algorithms could adversely affect people's lives and leave them with no way to contest unfavorable outcomes. Being a responsible data scientist means understanding the many risk factors involved, and the factors you can control, so you can minimize harm. Staying aware of emerging AI applications in the financial industry and regularly meeting with colleagues to discuss ways forward helps make outcomes fairer for everyone.

About the author

Devin Partida is a data science and technology writer, as well as the Editor-in-Chief of ReHack.com. Her work has been featured on Hackernoon, TechTarget, DZone and others.

Copyright and licence: © 2026 Devin Partida

This article is licensed under a Creative Commons Attribution 4.0 (CC BY 4.0) International licence.

How to cite:

Partida, Devin. 2026. “Understanding and Addressing Algorithmic Bias: a Credit Scoring Case Study.” Real World Data Science, 2026. URL