Title: Beyond Quantification: Navigating Uncertainty in Professional AI Systems

Author(s) and year: Sylvie Delacroix, Diana Robinson, Umang Bhatt, Jacopo Domenicucci, Jessica Montgomery, Gaël Varoquaux, Carl Henrik Ek, Vincent Fortuin, Yulan He, Tom Diethe, Neill Campbell, Mennatallah El-Assady, Søren Hauberg, Ivana Dusparic and Neil D. Lawrence (2025)

Status: Published in RSS: Data Science and Artificial Intelligence (open access)

As artificial intelligence systems—especially large language models (LLMs)—become woven into everyday professional practice, they increasingly influence sensitive decisions in healthcare, education, and law. These tools can draft medical notes, comment on student essays, propose legal arguments, and summarise complex documents. But while AI can now answer many questions confidently, professionals know that confidence is not always what matters most.

Consider a doctor who suspects a patient may be experiencing domestic abuse, or a teacher trying to distinguish between a student’s misunderstanding and a culturally shaped interpretation of a text. These are situations where uncertainty isn’t just about missing data—it’s about interpretation, ethics, and human judgment.

Yet much current AI research focuses on quantifying uncertainty: assigning probability scores, confidence levels, or error bars. The authors of this paper argue that while such numbers help in some cases, they miss the forms of uncertainty that truly matter in professional decision-making. If AI systems rely only on numeric confidence, they risk eroding the very expertise they aim to support.

This paper asks a simple but transformative question: What if uncertainty isn’t always something to quantify, but something to communicate?

Why quantification isn’t enough

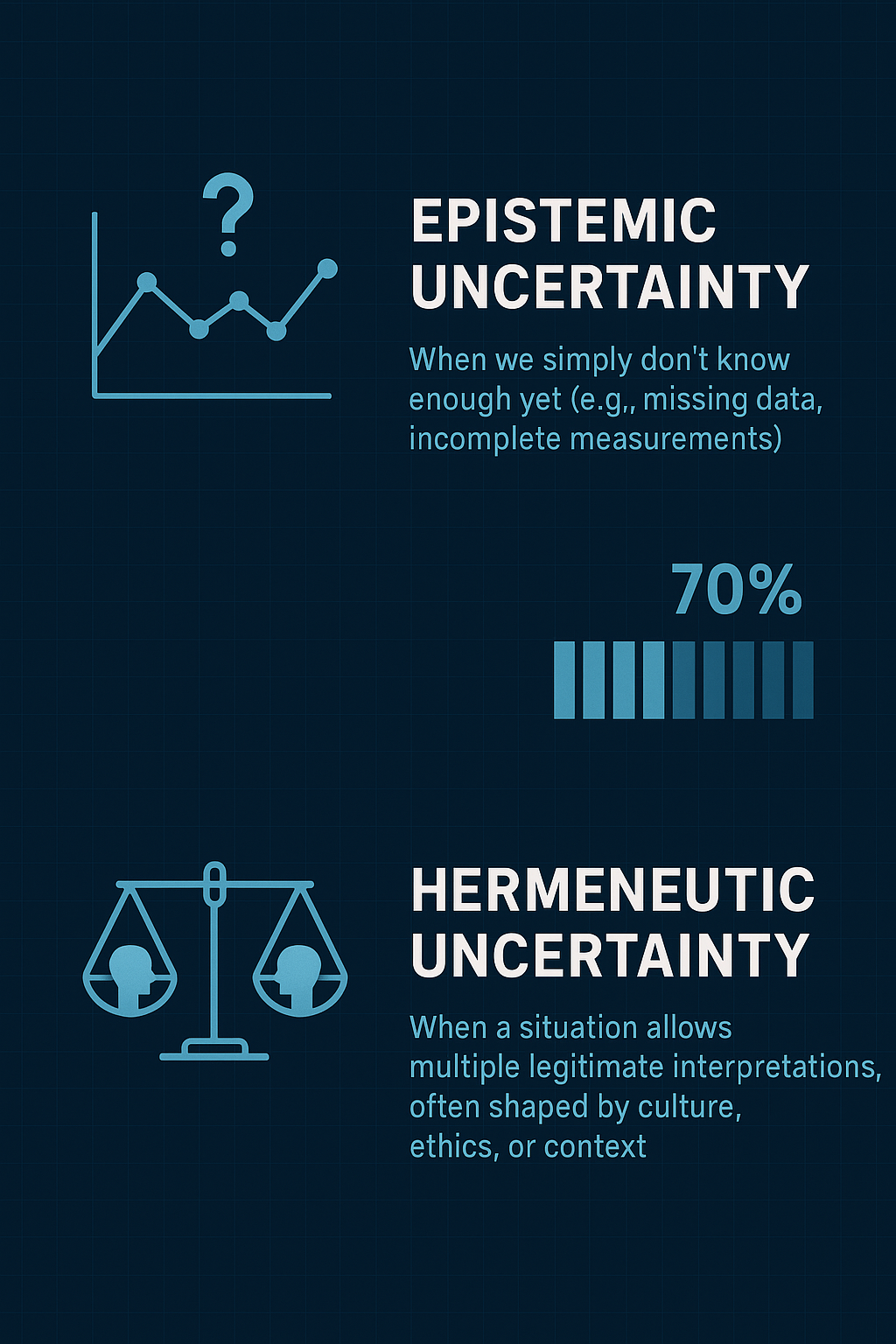

The authors highlight a fundamental mismatch between the way today’s AI systems handle uncertainty and the way real professionals experience it. They distinguish between:

Epistemic uncertainty – when we simply don’t know enough yet (e.g., missing data, incomplete measurements). This can often be quantified.

Hermeneutic uncertainty – when a situation allows multiple legitimate interpretations, often shaped by culture, ethics, or context. This cannot meaningfully be reduced to a percentage.

Professional judgment often depends on this second kind. Teachers, doctors, and lawyers rely on tacit skills: subtle perceptions, ethical intuitions, and context-sensitive interpretation. AI systems trained on statistical patterns struggle to reflect this nuance.

When an AI model gives a probability score — “I’m 70% sure this infection is bacterial” — it communicates something useful. But if the real uncertainty stems from ethical or contextual complexity (e.g., whether asking a patient certain questions might put them at risk), probability scores offer a false sense of clarity.

The paper gives practical examples:

A medical AI might be highly confident about symptoms but blind to the social dynamics suggesting abuse.

An educational AI may accurately flag grammar issues but miss culturally sensitive interpretations in a student essay.

In both cases, the most important uncertainties are precisely the ones that cannot be captured by numbers.
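One way to make the epistemic/hermeneutic distinction concrete in software is to give the two kinds different shapes, so that an interpretive question cannot silently be stored as a percentage. The sketch below is illustrative only: `Kind`, `Uncertainty` and `describe` are hypothetical names, not something the paper specifies, and it assumes the kind of each uncertainty is already known.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Kind(Enum):
    EPISTEMIC = "epistemic"      # reducible with more data; a probability can help
    HERMENEUTIC = "hermeneutic"  # several legitimate readings; no single number fits


@dataclass(frozen=True)
class Uncertainty:
    kind: Kind
    description: str
    probability: Optional[float] = None    # allowed only for EPISTEMIC items
    interpretations: Tuple[str, ...] = ()  # required only for HERMENEUTIC items

    def __post_init__(self) -> None:
        # Enforce the distinction when the object is created.
        if self.kind is Kind.EPISTEMIC:
            if self.probability is None or not 0.0 <= self.probability <= 1.0:
                raise ValueError("epistemic uncertainty needs a probability in [0, 1]")
        else:
            if self.probability is not None:
                raise ValueError("hermeneutic uncertainty is not reduced to a percentage")
            if len(self.interpretations) < 2:
                raise ValueError("hermeneutic uncertainty needs at least two readings")


def describe(u: Uncertainty) -> str:
    """Render an item without turning interpretive questions into a score."""
    if u.kind is Kind.EPISTEMIC:
        return f"{u.description}: estimated probability {u.probability:.0%}"
    return f"{u.description}: open to interpretation ({'; '.join(u.interpretations)})"


# Hypothetical examples echoing the doctor and teacher cases above.
infection = Uncertainty(Kind.EPISTEMIC, "Bacterial infection", probability=0.7)
essay = Uncertainty(
    Kind.HERMENEUTIC,
    "Student's reading of the text",
    interpretations=("misunderstanding", "culturally shaped interpretation"),
)
print(describe(infection))
print(describe(essay))
```

The design choice here is that the constructor rejects a probability on a hermeneutic item, so the "false sense of clarity" of a score is blocked by the data model rather than left to each interface.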

Why this matters now

The authors warn that the problem becomes even more serious as we move toward agentic AI systems—multiple AI agents interacting and making decisions together. If one system miscommunicates uncertainty, the error may ripple through an entire network.

To address this, the authors propose shifting away from trying to algorithmically “solve” uncertainty, and instead enabling professionals themselves to shape how AI expresses it.

Takeaways and implications

1. Uncertainty expression is part of professional expertise, not just a technical feature

AI should not simply output probabilities. It should help preserve and enhance the ways professionals reason through complex, ambiguous situations. That means:

highlighting when interpretation is required

surfacing multiple plausible perspectives

signalling when ethical judgment is involved

encouraging expanded inquiry rather than false certainty

For example, instead of producing a diagnosis score, an AI assistant might say: “This pattern warrants attention to social context. Consider asking open-ended questions to understand the patient’s circumstances.”

This kind of prompting respects and supports professional judgment.
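The four behaviours in this takeaway can be sketched as a reply builder that appends explicit signals to a finding instead of attaching a lone score. This is a hypothetical illustration, not the authors' system: `Signals` and `compose_response` are invented names, the wording of the added lines is a placeholder, and the sketch assumes the flags have already been set by some upstream process (for instance, rules agreed by a professional community).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Signals:
    """Hypothetical flags an assistant might attach to a case."""
    needs_interpretation: bool = False
    perspectives: List[str] = field(default_factory=list)
    ethical_judgment: bool = False
    suggested_inquiry: str = ""


def compose_response(finding: str, signals: Signals) -> List[str]:
    """Build a reply that surfaces judgment calls rather than a single score."""
    lines = [finding]
    if signals.needs_interpretation:
        lines.append("Interpretation required: this cannot be settled from the data alone.")
    if signals.perspectives:
        lines.append("Plausible perspectives: " + "; ".join(signals.perspectives))
    if signals.ethical_judgment:
        lines.append("Ethical judgment involved: the decision rests with the professional.")
    if signals.suggested_inquiry:
        lines.append(signals.suggested_inquiry)
    return lines


# Reuses the example wording from the paper's medical scenario.
reply = compose_response(
    "This pattern warrants attention to social context.",
    Signals(
        needs_interpretation=True,
        ethical_judgment=True,
        suggested_inquiry="Consider asking open-ended questions to understand "
                          "the patient's circumstances.",
    ),
)
for line in reply:
    print(line)
```

Each flag maps to one of the listed behaviours, and flags that are not set add nothing, so the reply stays short when no judgment call is involved.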

2. Professionals—not engineers—must define how uncertainty is communicated

The authors propose participatory refinement, a process where communities of practitioners (teachers, doctors, judges, etc.) collectively shape:

the categories of uncertainty that matter in their field

the language and formats AI systems should use

how these systems should behave in ethically sensitive scenarios

This differs from typical user feedback loops. Instead of individuals clicking “thumbs down,” whole professions deliberate on what kinds of uncertainty an AI system should express and how.
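A minimal sketch of what a community-defined uncertainty framework might look like is plain, editable data: the categories a profession cares about, the wording it agreed on, and how the system should behave for each. Everything here is hypothetical: `Category`, `CLINICAL_FRAMEWORK` and `express` are invented names, and the framework contents are illustrative placeholders, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Category:
    """One uncertainty category agreed by a community of practitioners."""
    name: str
    phrasing: str             # wording the community chose for this category
    show_numeric_score: bool  # whether a probability may accompany the message
    ethically_sensitive: bool


# Hypothetical framework a clinical community might agree on (placeholder content).
CLINICAL_FRAMEWORK: Dict[str, Category] = {
    "missing_data": Category(
        "missing_data",
        "Further tests could reduce this uncertainty.",
        show_numeric_score=True,
        ethically_sensitive=False,
    ),
    "social_context": Category(
        "social_context",
        "This pattern warrants attention to social context.",
        show_numeric_score=False,
        ethically_sensitive=True,
    ),
}


def express(framework: Dict[str, Category], category: str,
            score: Optional[float] = None) -> str:
    """Phrase an uncertainty using the community's agreed wording and rules."""
    entry = framework[category]
    message = entry.phrasing
    if score is not None and entry.show_numeric_score:
        message += f" (estimated probability {score:.0%})"
    if entry.ethically_sensitive:
        message += " Ethically sensitive: defer to professional judgment."
    return message


print(express(CLINICAL_FRAMEWORK, "missing_data", 0.7))
print(express(CLINICAL_FRAMEWORK, "social_context", 0.7))
```

Because the framework is data rather than logic, a professional body could revise categories, wording, or score visibility through its own deliberation without engineers rewriting `express`. In this sketch the same score of 0.7 is shown for the data-driven category but suppressed for the ethically sensitive one.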

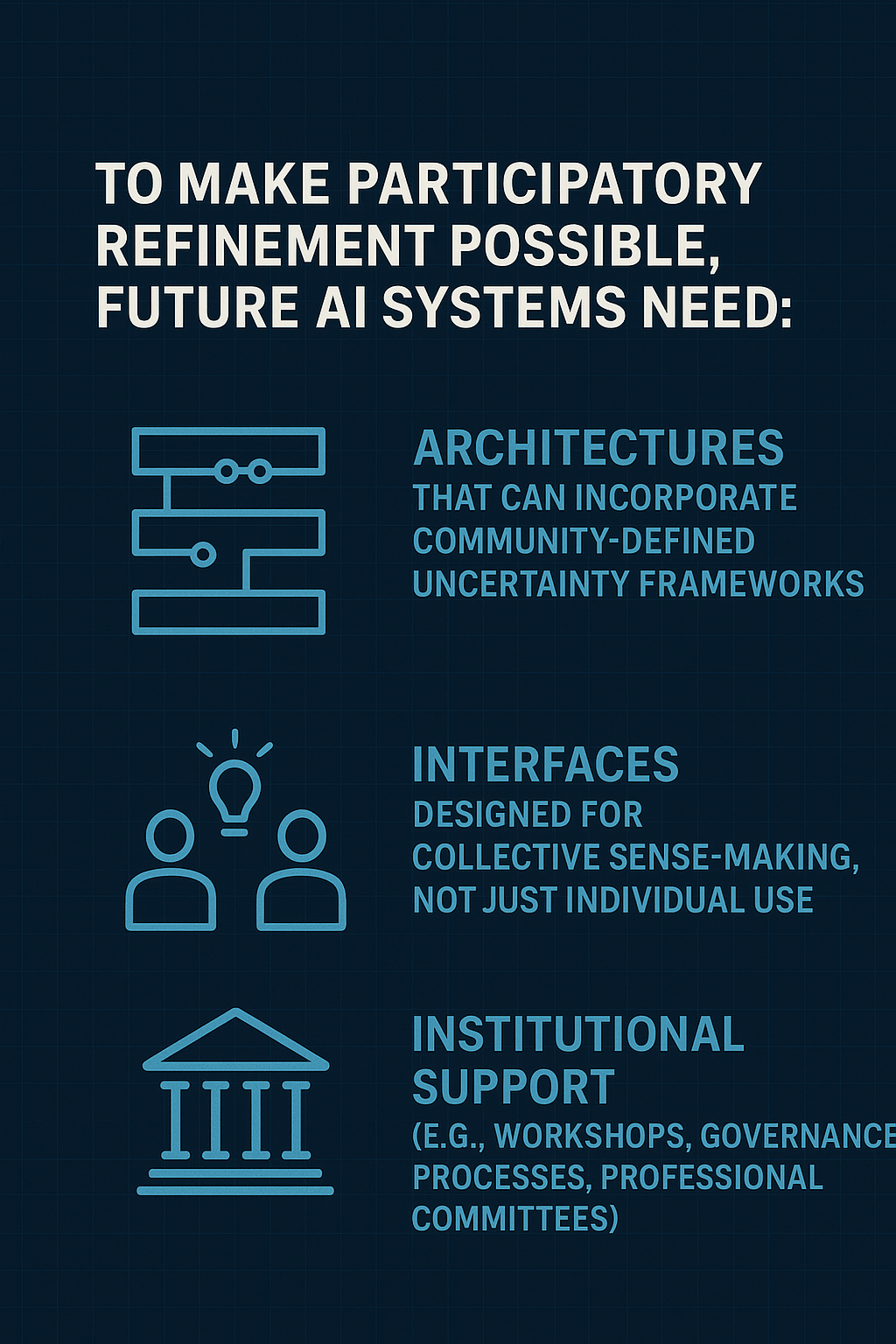

3. This requires new technical and organisational approaches

To make participatory refinement possible, future AI systems need:

architectures that can incorporate community-defined uncertainty frameworks

interfaces designed for collective sense-making, not just individual use

institutional support (e.g., workshops, governance processes, professional committees)

While this takes more time than simply deploying an AI system “out of the box,” the authors argue that in fields like healthcare or law, these deliberative processes are essential, not optional.

4. Preserving “productive uncertainty” is key for ethical, adaptive professional practice

If AI tools flatten complex uncertainty into simple numbers, they may unintentionally narrow the space for professional judgment and ethical debate. The authors suggest that sustained ambiguity—open questions, competing interpretations, ethical reflection—is not a flaw in human reasoning but a feature of high-quality professional work.

Well-designed AI should help maintain that reflective space, not close it down.

Further reading

For readers interested in exploring more:

David Spiegelhalter – The Art of Uncertainty (accessible introduction to uncertainty in science)

Iris Murdoch – The Sovereignty of Good (on moral perception)

Participatory AI frameworks such as STELA (Bergman et al., 2024)

Visual analytics research on human-in-the-loop data interpretation

Discussions of agentic AI systems and coordinated AI in healthcare

Delacroix’s work on LLMs in ethical and legal decision-making

In summary

This paper argues that if AI is to genuinely assist professionals, it must go beyond quantification. Numbers alone cannot capture the ethical, interpretive, and contextual uncertainties that define professional practice. Instead, AI should help preserve and enrich human judgment by communicating uncertainty in ways co-designed with the communities who rely on it. AI should not just be accurate; it should be appropriately uncertain.

About the author

Annie Flynn is Head of Content at the RSS.

About DataScienceBites

DataScienceBites is written by graduate students and early career researchers in data science (and related subjects) at universities throughout the world, as well as industry researchers. We publish digestible, engaging summaries of interesting new pre-print and peer-reviewed publications in the data science space, with the goal of making scientific papers more accessible. Find out how to become a contributor.